Kafka .10 Client Reading Old Message Format

Kafka Clients

Kafka clients are created to read data from and write data to the Kafka system. Clients can be producers, which publish content to Kafka topics. Clients can be subscribers, which read content from Kafka topics.

Commands for Customer Interactions

Assuming you lot have Kafka running on your cluster, here are some commands that describe the typical steps you would need to practice Kafka functionality:

- Create a topic

-

kafka-topics --create --zookeeper zkinfo --replication-cistron 1 --partitions ane --topic exam

where the ZooKeeper connect string zkinfo is a comma-separated list of the Zookeeper nodes in host: port format.

- Validate the topic was created successfully

-

kafka-topics --list --zookeeper zkinfo

- Produce letters

-

The following command tin exist used to publish a message to the Kafka cluster. After the command, each typed line is a message that is sent to Kafka. Later on the last message, send an EOF or stop the command with Ctrl-D.

$ kafka-console-producer --broker-list kafkainfo --topic test My beginning message. My second message. ^D

where kafkainfo is a comma-separated list of the Kafka brokers in host:port format. Using more than i makes sure that the command can find a running broker.

- Swallow messages

-

The post-obit command tin can be used to subscribe to a message from the Kafka cluster.

kafka-console-consumer --bootstrap-server kafkainfo --topic test --from-beginning

The output shows the same messages that y'all entered during your producer.

- Prepare a ZooKeeper root node

-

It's possible to use a root node (chroot) for all Kafka nodes in ZooKeeper by setting a value for zookeeper.chroot in Cloudera Director. Append this value to the end of your ZooKeeper connect cord.

Prepare chroot in Cloudera Manager:

zookeeper.chroot=/kafka

Form the ZooKeeper connect string as follows:

--zookeeper zkinfo/kafka

If you set chroot and and then use only the host and port in the connect string, yous'll see the following exception:

InvalidReplicationFactorException: replication cistron: iii larger than available brokers: 0

Kafka Producers

Kafka producers are the publishers responsible for writing records to topics. Typically, this ways writing a program using the KafkaProducer API. To instantiate a producer:

KafkaProducer<String, String> producer = new KafkaProducer<>(producerConfig);

Well-nigh of the of import producer settings, and mentioned beneath, are in the configuration passed by this constructor.

Serialization of Keys and Values

For each producer, there are 2 serialization properties that must exist set, central.serializer (for the key) and value.serializer (for the value). You lot tin can write custom code for serialization or utilise one of the ones already provided by Kafka. Some of the more commonly used ones are:

- ByteArraySerializer: Binary data

- StringSerializer: String representations

Managing Record Throughput

There are several settings to control how many records a producer accumulates before really sending the data to the cluster. This tuning is highly dependent on the data source. Some possibilities include:

- batch.size: Combine this fixed number of records before sending data to the cluster.

- linger.ms: Ever look at least this amount of time before sending data to the cluster; and then send notwithstanding many records has accumulated in that fourth dimension.

- max.asking.size: Put an absolute limit on information size sent. This technique prevents network congestion caused by a single transfer asking containing a large amount of information relative to the network speed.

- compression.type: Enable compression of data being sent.

- retries: Enable the client for retries based on transient network errors. Used for reliability.

Acknowledgments

The full write path for records from a producer is to the leader partition and so to all of the follower replicas. The producer can control which betoken in the path triggers an acquittance. Depending on the acks setting, the producer may await for the write to propagate all the way through the system or only wait for the earliest success point.

Valid acks values are:

- 0: Do not wait for whatsoever acknowledgment from the partition (fastest throughput).

- 1: Wait just for the leader partition response.

- all: Look for follower partitions responses to see minimum (slowest throughput).

Sectionalisation

In Kafka, the partitioner determines how records map to partitions. Apply the mapping to ensure the order of records within a partition and manage the balance of letters across partitions. The default partitioner uses the entire key to determine which segmentation a message corresponds to. Records with the same key are e'er mapped to the same partition (assuming the number of partitions does not change for a topic). Consider writing a custom partitioner if yous take information about how your records are distributed that tin can produce more efficient load balancing across partitions. A custom partitioner lets you have reward of the other data in the record to control partitioning.

If a partitioner is not provided to the KafkaProducer, Kafka uses a default partitioner.

The ProducerRecord class is the actual object candy by the KafkaProducer. It takes the post-obit parameters:

- Kafka Record: The key and value to exist stored.

- Intended Destination: The destination topic and the specific partitioning (optional).

Kafka Consumers

Kafka consumers are the subscribers responsible for reading records from one or more topics and one or more partitions of a topic. Consumers subscribing to a topic can happen manually or automatically; typically, this means writing a programme using the KafkaConsumer API.

To instantiate a consumer:

KafkaConsumer<String, Cord> kafkaConsumer = new KafkaConsumer<>(consumerConfig);

The KafkaConsumer form has two generic blazon parameters. But as producers can send information (the values) with keys, the consumer can read data by keys. In this example both the keys and values are strings. If you define different types, you need to define a deserializer to accommodate the alternate types. For deserializers you need to implement the org.apache.kafka.common.serialization.Deserializer interface.

The most of import configuration parameters that we demand to specify are:

- bootstrap.servers: A listing of brokers to initially connect to. List 2 to three brokers; you don't needed to listing the full cluster.

- group.id: Every consumer belongs to a group. That way they'll share the partitions of a topic.

- key.deserializer/value.deserializer: Specify how to catechumen the Coffee representation to a sequence of bytes to ship data through the Kafka protocol.

Subscribing to a topic

Subscribing to a topic using the subscribe() method call:

kafkaConsumer.subscribe(Collections.singletonList(topic), rebalanceListener);

Hither we specify a listing of topics that we want to eat from and a 'rebalance listener.' Rebalancing is an important part of the consumer'due south life. Whenever the cluster or the consumers' state changes, a rebalance volition be issued. This will ensure that all the partitions are assigned to a consumer.

After subscribing to a topic, the consumer polls to run across if in that location are new records:

while (true) { data = kafkaConsumer.poll(); // practise something with 'data' } The poll returns multiple records that tin be candy by the customer. Later processing the records the client commits offsets synchronously, thus waiting until processing completes before standing to poll.

The last important point is to relieve the progress. This can be done past the commitSync() and commitAsync() methods respectively.

PLACEHOLDER FOR CODE SNIPPET

Auto commit is not recommended; manual commit is appropriate in the bulk of use cases.

Groups and Fetching

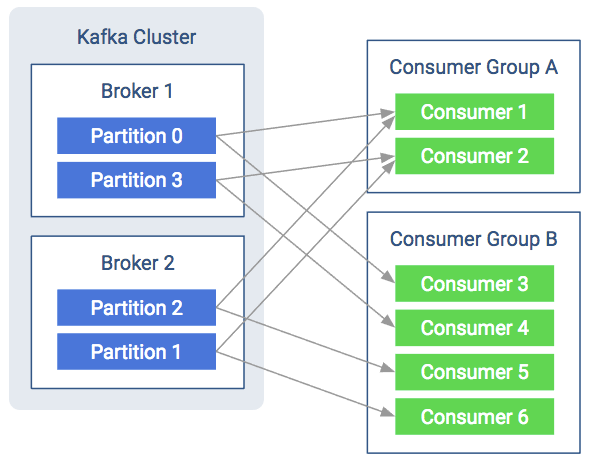

Kafka consumers are commonly assigned to a group. This happens statically by setting the group.id configuration property in the consumer configuration. Consuming with groups will effect in the consumers balancing the load in the grouping. That means each consumer volition accept their fair share of partitions. As well it can never be more consumers than partitions as that way there would be idling consumers.

As shown in the figure below, both consumer groups share the partitions and each partitioning multicasts messages to both consumer groups. The consumers pull messages from the banker instead of the banker periodically pushing what is bachelor. This helps the consumer as it won't exist overloaded and it can query the banker at its own speed. Furthermore, to avoid tight looping, it uses a so chosen "long-poll". The consumer sends a fetch request to poll for data and receives a reply just when enough data accumulates on the banker.

Consumer Groups and Fetching from Partitions

Protocol betwixt Consumer and Banker

This section details how the protocol works, what letters are going on the wire and how that contributes to the overall beliefs of the consumer. When discussing the internals of the consumers, there are a couple of basic terms to know:

- Heartbeat

- When the consumer is alive and is part of the consumer group, information technology sends heartbeats. These are short periodic messages that tell the brokers that the consumer is alive and everything is fine.

- Session

- Often one missing heartbeat is not a big deal, only how do y'all know if a consumer is not sending heartbeats for long enough to indicate a problem? A session is such a fourth dimension interval. If the consumer didn't ship any heartbeats for longer than the session, the broker tin consider the consumer dead and remove it from the group.

- Coordinator

- The special broker which manages the group on the banker side is called the coordinator. The coordinator handles heartbeats and assigns the leader. Every group has a coordinator that organizes the startup of a consumer group and help whenever a consumer leaves the group.

- Leader

- The leader consumer is elected by the coordinator. Its job is to assign partitions to every consumer in the group at startup or whenever a consumer leaves or joins the group. The leader holds the assignment strategy, it is decoupled from the broker. That means consumers can reconfigure the division assignment strategy without restarting the brokers.

Startup Protocol

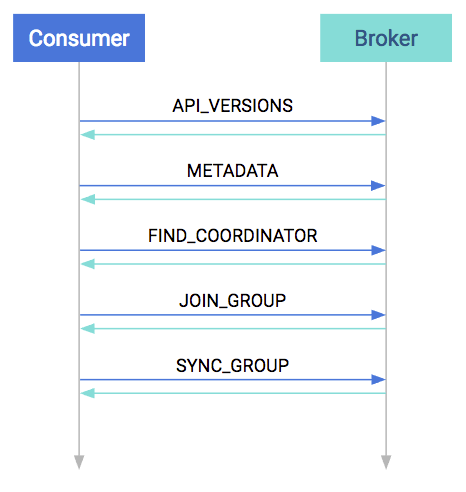

Every bit mentioned before, the consumers are working usually in groups. So a major role of the startup procedure is spent with figuring out the consumer group.

At startup, the showtime step is to match protocol versions. It is possible that the broker and the consumer are of unlike versions (the banker is older and the consumer is newer, or vice versa). This matching is done by the API_VERSIONS request.

Startup Protocol

The next step is to collect cluster information, such equally the addresses of all the brokers (prior to this point we used the bootstrap server as a reference), partition counts, and sectionalisation leaders. This is done in the METADATA request.

After acquiring the metadata, the consumer has the data needed to join the group. Past this time on the banker side, a coordinator has been selected per consumer group. The consumers must detect their coordinator with the FIND_COORDINATOR request.

Later on finding the coordinator, the consumer(due south) are fix to join the group. Every consumer in the grouping sends their ain member-specific metadata to the coordinator in the JOIN_GROUP request. The coordinator waits until all the consumers have sent their asking, and so assigns a leader for the group. At the response plus the nerveless metadata are sent to the leader, so it knows well-nigh its group.

The remaining step is to assign partitions to consumers and propagate this state. Similar to the previous asking, all consumers send a SYNC_GROUP request to the coordinator; the leader provides the assignments in this request. Subsequently it receives the sync request from each group member, the coordinator propagates this member state in the response. By the end of this step, the consumers are ready and can start consuming.

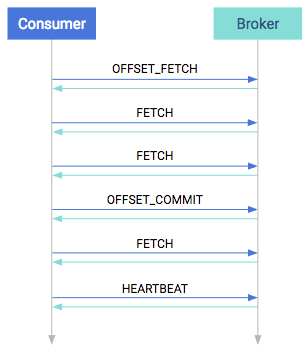

Consumption Protocol

When consuming, the start step is to query where should the consumer get-go. This is done in the OFFSET_FETCH asking. This is not mandatory: the consumer tin can besides provide the first manually. After this, the consumer is free to pull data from the broker. Data consumption happens in the FETCH requests. These are the long-pull requests. They are answered merely when the broker has enough data; the request tin exist outstanding for a longer period of time.

Consumption Protocol

From fourth dimension to time, the awarding has to either manually or automatically salvage the offsets in an OFFSET_COMMIT asking and send heartbeats as well in the HEARTBEAT requests. The first ensures that the position is saved while the latter ensures that the coordinator knows that the consumer is alive.

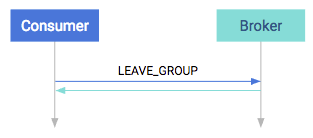

Shutdown Protocol

The terminal step when the consumption is done is to shut downwards the consumer gracefully. This is washed in 1 single step, chosen the LEAVE_GROUP protocol.

Shutdown Protocol

Rebalancing Partitions

Y'all may notice that there are multiple points in the protocol betwixt consumers and brokers where failures tin can occur. There are points in the normal operation of the organisation where you lot demand to change the consumer group assignments. For example, to swallow a new partitioning or to answer to a consumer going offline. The process or responding to cluster information irresolute is called rebalance. Information technology can occur in the following cases:

- A consumer leaves. It tin can exist a software failure where the session times out or a connectedness stalls for too long, but it tin besides be a svelte shutdown.

- A consumer joins. It can be a new consumer simply an old one that but recovered from a software failure (automatically or manually).

- Partitioning is adjusted. A partition tin simply become offline considering of a broker failure or a segmentation coming back online. Alternatively an administrator can add or remove partitions to/from the broker. In these cases the consumers must reassign who is consuming.

- The cluster is adapted. When a broker goes offline, the partitions that are lead past this banker will exist reassigned. In plow the consumers must rebalance so that they consume from the new leader. When a broker comes back, and so eventually a preferred leader election happens which restores the original leadership. The consumers must follow this change every bit well.

On the consumer side, this rebalance is propagated to the client via the ConsumerRebalanceListener interface. It has two methods. The first, onPartitionsRevoked, volition be invoked when whatsoever partition goes offline. This call happens before the changes would reflect in any of the consumers, then this is the chance to save offsets if manual get-go commit is used. On the other mitt onPartitionsAssigned is invoked after partition reassignment. This would allow for the programmer to detect which partitions are currently assigned to the electric current consumer. Complete examples tin be found in the development section.

Consumer Configuration Properties

At that place are some very important configurations that any user of Kafka must know:

- heartbeat.interval.ms: The interval of the heartbeats. For example, if the heartbeat interval is fix to three seconds, the consumer sends a brusque heartbeat message to the broker every iii seconds to indicate that information technology is alive.

- session.timeout.ms: The consumer tells this timeout to the coordinator. This is used to control the heartbeats and remove the expressionless consumers. If it'southward set to ten seconds, the consumer can miss sending 2 heartbeats, assuming the previous heartbeat setting. If we increment the timeout, the consumer has more room for delays but the broker notices lagging consumers later.

- max.poll.interval.ms: It is a very important particular: the consumers must maintain polling and should never exercise long-running processing. If a consumer is taking too much time betwixt two polls, information technology will be detached from the consumer grouping. Nosotros can tune this configuration according to our needs. Note that if a consumer is stuck in processing, it will exist noticed later if the value is increased.

- request.timeout.ms: By and large every request has a timeout. This is an upper bound that the client waits for the server's response. If this timeout elapses, then retries might happen if the number of retries are not exhausted.

Retries

In Kafka retries typically happen on only for certain kinds of errors. When a retriable fault is returned, the clients are constrained past ii facts: the timeout period and the backoff period.

The timeout period tells how long the consumer can retry the functioning. The backoff flow how often the consumer should retry. In that location is no generic approach for "number of retries." Number of retries are usually controlled by timeout periods.

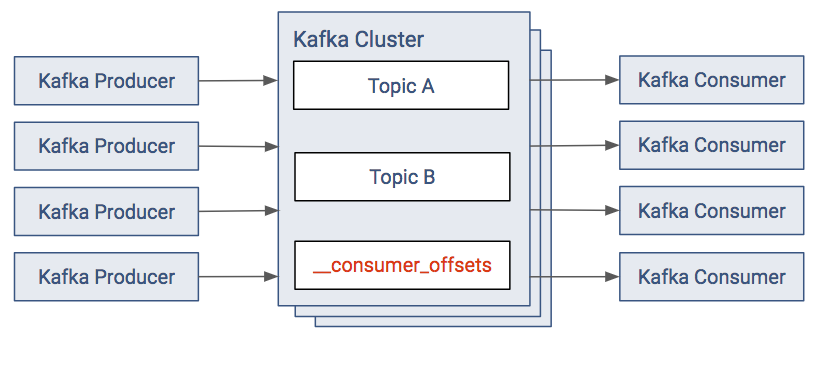

Kafka Clients and ZooKeeper

The default consumer model provides the metadata for offsets in the Kafka cluster. There is a topic named __consumer_offsets that the Kafka Consumers write their offsets to.

Kafka Consumer Dependencies

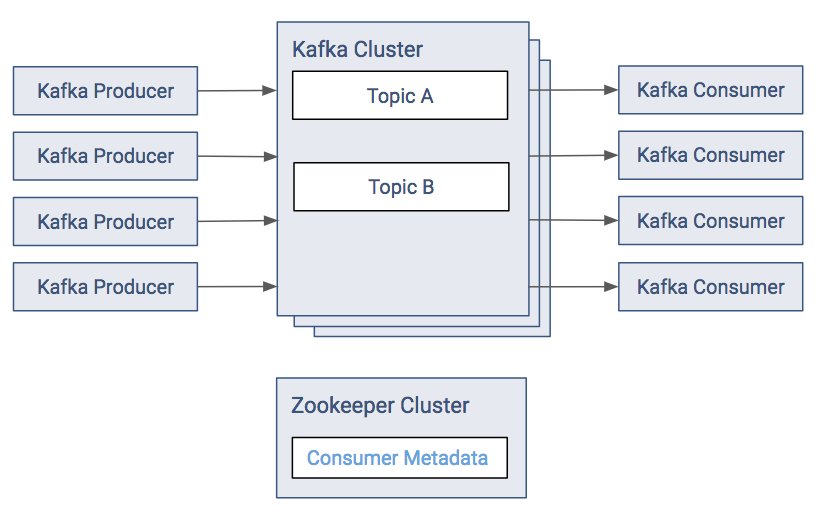

In releases earlier version 2.0 of CDK Powered by Apache Kafka, the same metadata was located in ZooKeeper. The new model removes the dependency and load from Zookeeper. In the old approach:

- The consumers relieve their offsets in a "consumer metadata" section of ZooKeeper.

- With most Kafka setups, there are oft a large number of Kafka consumers. The resulting client load on ZooKeeper tin can exist meaning, therefore this solution is discouraged.

Kafka Consumer Dependencies (Old Arroyo)

Kafka .10 Client Reading Old Message Format

Source: https://docs.cloudera.com/documentation/enterprise/6/6.3/topics/kafka_clients.html

0 Response to "Kafka .10 Client Reading Old Message Format"

Post a Comment